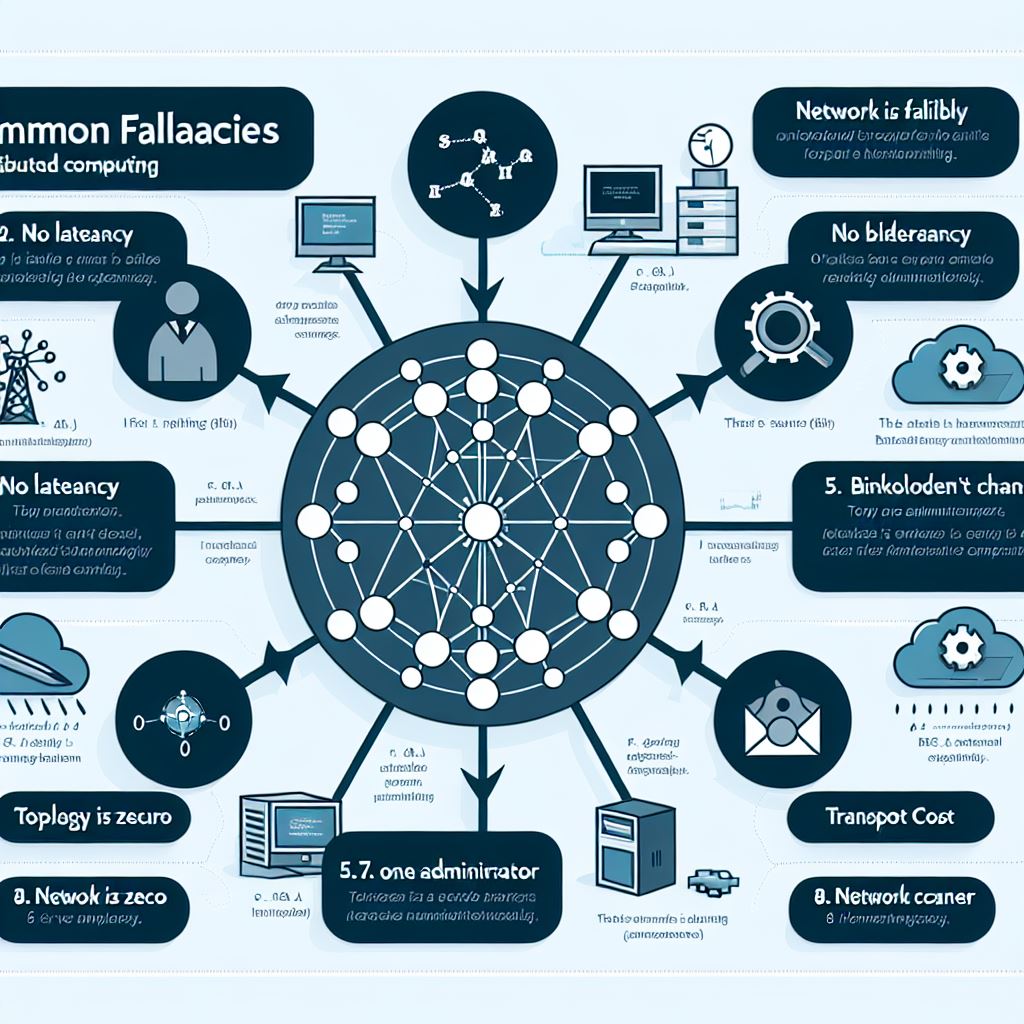

Distributed computing has roots tracing back to the ARPANET in the late 1960s and the SWIFT network in the 1970s. Despite decades of progress since then, a set of misconceptions known as the "Fallacies of Distributed Computing" continues to challenge architects and developers. Originally listed by Peter Deutsch in 1994 and later extended by James Gosling, these fallacies name the erroneous assumptions that can lead to significant issues in distributed systems.

The Network is Reliable

Definition: The assumption that the network will always function without failures.

Reality: Networks can fail due to hardware issues, software bugs, power outages, and human errors. Redundancy and error-handling mechanisms are crucial to mitigate these risks.

One of the most persistent fallacies is the belief in network reliability. Although hardware such as switches and routers has an impressive Mean Time Between Failures (MTBF), power outages, physical disconnections, and software failures can still take a network down. Mission-critical applications therefore need redundancy and robust error handling, such as timeouts and retries.
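
One common error-handling mechanism is to retry a failed call with exponential backoff. The sketch below is a minimal illustration, not a production client: `call_with_retries` and `FlakyService` are hypothetical names, and the retry count and delays are assumed values.

```python
import random
import time


def call_with_retries(operation, attempts=4, base_delay=0.1, sleep=time.sleep):
    """Run operation(), retrying on ConnectionError or TimeoutError."""
    for attempt in range(attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError):
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            # Exponential backoff with jitter so clients do not retry in lockstep.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))


class FlakyService:
    """Hypothetical stand-in for a remote call that fails a few times first."""

    def __init__(self, failures_before_success):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def fetch(self):
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("simulated network failure")
        return "payload"
```

Retries are only safe when the remote operation is idempotent; otherwise a request that succeeded but whose reply was lost may be applied twice.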

Latency is Zero

Definition: The belief that data transfer between nodes happens instantaneously.

Reality: Latency, the time it takes for data to travel from one point to another, can significantly impact performance, especially over long distances. Systems must be designed to minimize remote calls and optimize data transfer.

Latency is often underestimated. Unlike bandwidth, which has improved substantially over the years, latency is ultimately bounded by the speed of light, so it remains a bottleneck, especially in wide-area networks (WANs). Developers must design systems that minimize the number of remote calls and move as much data as practical in each call.
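
To make the cost of chatty remote calls concrete, the sketch below counts round trips against a hypothetical `RemoteStore`. The class, its methods, and the 50 ms round-trip time are all assumptions for illustration; no real network is involved.

```python
class RemoteStore:
    """Hypothetical remote key-value store that counts round trips."""

    def __init__(self, data, rtt_seconds=0.05):
        self._data = dict(data)
        self.rtt_seconds = rtt_seconds  # assumed round-trip time per call
        self.round_trips = 0

    def get(self, key):
        self.round_trips += 1  # one network round trip per key
        return self._data[key]

    def get_many(self, keys):
        self.round_trips += 1  # one round trip for the whole batch
        return {key: self._data[key] for key in keys}

    def latency_spent(self):
        """Time spent on latency alone, ignoring transfer and processing."""
        return self.round_trips * self.rtt_seconds


keys = [f"user:{i}" for i in range(100)]
data = {key: i for i, key in enumerate(keys)}

# Chatty client: one remote call per key.
chatty = RemoteStore(data)
chatty_result = {key: chatty.get(key) for key in keys}

# Batched client: a single remote call for all keys.
batched = RemoteStore(data)
batched_result = batched.get_many(keys)
```

Both clients end up with the same data, but under the assumed round-trip time the chatty client pays the latency 100 times and the batched client pays it once.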

Bandwidth is Infinite

Definition: The assumption that there is unlimited capacity for data transfer.

Reality: Bandwidth is limited and can be constrained by factors like packet loss and frame size. Applications need to be efficient in their data usage to avoid bottlenecks.

Although bandwidth has increased over the years, the demand for data-intensive applications like video streaming and VoIP continues to grow. Additionally, packet loss and frame size limitations can further constrain effective bandwidth. It's crucial to design applications that are mindful of these limitations and optimize data usage accordingly.
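
One way to be mindful of limited bandwidth is to compress repetitive payloads before sending them. The following sketch uses Python's standard `zlib` module; the sample records and the 1 Mbit/s link speed are assumptions, and `transfer_seconds` is a hypothetical helper that ignores latency and packet loss.

```python
import json
import zlib


def transfer_seconds(num_bytes, bandwidth_bits_per_second):
    """Ideal time to push num_bytes through a link, ignoring latency and loss."""
    return num_bytes * 8 / bandwidth_bits_per_second


# Repetitive structured data, typical of API responses, compresses well.
records = [{"id": i, "status": "active", "region": "eu-west"} for i in range(1000)]
raw = json.dumps(records).encode("utf-8")
compressed = zlib.compress(raw, level=6)

link = 1_000_000  # assumed 1 Mbit/s link
raw_time = transfer_seconds(len(raw), link)
compressed_time = transfer_seconds(len(compressed), link)
```

Compression trades CPU time for bytes on the wire, so it pays off mainly when the link, not the processor, is the bottleneck.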

The Network is Secure

Definition: The belief that the network is inherently secure from threats.

Reality: Networks are vulnerable to various security threats, including attacks and unauthorized access. Security must be built into the system from the beginning, with multi-layered defenses and continuous monitoring.

Security remains a significant concern in distributed systems. Despite advancements, many networks still rely on perimeter defenses, leaving them vulnerable once these defenses are breached. Comprehensive security measures, including threat modeling and multi-layered defenses, are necessary to protect against various threats.
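
As one small layer in a multi-layered defense, services can authenticate each message instead of trusting everything inside the perimeter. The sketch below uses the standard `hmac` module; the shared key and message contents are made up, and `sign` and `verify` are hypothetical helper names. It provides integrity and authenticity only, not confidentiality, which would still need encryption such as TLS.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Return an HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check the tag in constant time to avoid timing side channels."""
    return hmac.compare_digest(sign(key, message), tag)


key = b"shared-secret"        # assumed to be distributed out of band
message = b"transfer 100"
tag = sign(key, message)

accepted = verify(key, message, tag)            # untouched message
rejected = verify(key, b"transfer 900", tag)    # tampered message
```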

Topology Does Not Change

Definition: The assumption that the network structure remains static.

Reality: Network topology is dynamic, with changes in server locations, client connections, and routing paths. Applications should be designed to handle these changes gracefully.

Network topology is dynamic, with servers being added or removed and clients connecting from various locations. Applications must be designed to handle these changes gracefully, using techniques like location transparency and discovery services to adapt to the evolving network landscape.
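
A discovery service can be sketched as a registry in which instances announce themselves with heartbeats and drop out when the heartbeats stop. `ServiceRegistry`, its method names, and the 30-second time-to-live are assumptions for illustration; the current time is passed in explicitly to keep the example deterministic.

```python
class ServiceRegistry:
    """Hypothetical in-memory registry with heartbeat-based expiry."""

    def __init__(self, ttl_seconds=30.0):
        self.ttl = ttl_seconds
        self._instances = {}  # (service, address) -> time of last heartbeat

    def heartbeat(self, service, address, now):
        """Register an instance or refresh its last-seen time."""
        self._instances[(service, address)] = now

    def deregister(self, service, address):
        """Remove an instance that is shutting down cleanly."""
        self._instances.pop((service, address), None)

    def lookup(self, service, now):
        """Return addresses whose last heartbeat is within the TTL."""
        return sorted(
            address
            for (name, address), seen in self._instances.items()
            if name == service and now - seen <= self.ttl
        )


registry = ServiceRegistry(ttl_seconds=30.0)
registry.heartbeat("orders", "10.0.0.1:8080", now=0)
registry.heartbeat("orders", "10.0.0.2:8080", now=20)
```

Clients look up a service by name on each call (or cache the result briefly) rather than hard-coding addresses, so added or removed servers are picked up without reconfiguration.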

There is One Administrator

Definition: The belief that a single administrator manages the entire network.

Reality: Large networks often have multiple administrators with different responsibilities. Systems need to provide tools for diagnosing and resolving issues across different administrative domains.

In reality, multiple administrators often manage different aspects of a network, especially in large organizations or collaborative environments. This complexity requires robust tools for diagnosing and resolving issues, as well as careful planning for upgrades and interoperability.
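
When different administrators upgrade components on their own schedules, one way to plan for interoperability is to have both sides negotiate a protocol version. The function below is a hypothetical sketch of that idea; the version numbers are assumed to be plain integers.

```python
def negotiate_version(client_versions, server_versions):
    """Return the highest protocol version both sides support, or None."""
    common = set(client_versions) & set(server_versions)
    return max(common) if common else None


# A client upgraded by one team talks to a server managed by another.
agreed = negotiate_version([1, 2, 3], [2, 3, 4])
no_overlap = negotiate_version([1], [2])
```

A `None` result gives the caller a clear, diagnosable failure instead of an obscure protocol error halfway through a request.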

Transport Cost is Zero

Definition: The assumption that data transport is free in terms of resources and financial cost.

Reality: Transporting data incurs costs, including computational resources for data serialization and financial expenses for network infrastructure. Efficient data handling and cost-effective solutions are necessary.

Transport costs, both in terms of computational resources and financial expenses, are often overlooked. Marshaling data for transport and maintaining network infrastructure incurs significant costs. Efficient data serialization and cost-effective network solutions are essential to manage these expenses.
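
The cost of marshaling can be measured directly. The sketch below serializes the same made-up sensor readings as JSON and as a fixed-width binary layout using the standard `struct` module, recording time and size for each; the data and the layout are assumptions, and the timings will vary by machine.

```python
import json
import struct
import time

readings = [(i, i * 0.5) for i in range(10_000)]  # (sensor id, value) pairs

start = time.perf_counter()
as_json = json.dumps(readings).encode("utf-8")
json_seconds = time.perf_counter() - start

start = time.perf_counter()
# "<Id": little-endian 4-byte unsigned int, then 8-byte float, no padding.
as_binary = b"".join(struct.pack("<Id", sensor, value) for sensor, value in readings)
binary_seconds = time.perf_counter() - start

bytes_per_reading = len(as_binary) // len(readings)
```

The binary form uses 12 bytes per reading and is smaller than the JSON text here, but it is harder to debug and evolve, so the right trade-off depends on how much the bytes and CPU time actually cost.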

The Network is Homogeneous

Definition: The belief that the network consists of uniform devices and protocols.

Reality: Networks are heterogeneous, comprising various devices, operating systems, and protocols. Designing for interoperability using standard technologies is essential to manage this diversity.

Networks are inherently heterogeneous, comprising various devices, operating systems, and protocols. Designing for interoperability from the outset, using standard technologies like XML and Web Services, can help mitigate the challenges posed by this diversity.
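
Text formats such as XML sidestep many platform differences, but the same concern shows up in binary protocols, where machines may disagree on byte order. As an illustration of designing for interoperability (a different technique from the XML and Web Services mentioned above), the sketch below fixes the byte order of a hypothetical message header using the standard `struct` module.

```python
import struct


def encode_header(message_type, length):
    """Pack a header as a 2-byte type and a 4-byte length."""
    # "!" selects network (big-endian) byte order with standard sizes,
    # so the bytes are the same on every host architecture.
    return struct.pack("!HI", message_type, length)


def decode_header(data):
    """Unpack a header produced by encode_header."""
    return struct.unpack("!HI", data)


header = encode_header(1, 258)
# header == b"\x00\x01\x00\x00\x01\x02"
```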

Key Concepts

Network Reliability

- Definition: The ability of a network to keep delivering data without failures.

- Reality: Networks can fail due to hardware issues, software bugs, power outages, and human errors. Redundancy and error-handling mechanisms are crucial to mitigate these risks.

Latency

- Definition: The time it takes for data to travel from one point to another.

- Reality: Latency can significantly impact performance, especially over long distances. Systems must be designed to minimize remote calls and optimize data transfer.

Bandwidth

- Definition: The capacity for data transfer within a network.

- Reality: Bandwidth is limited and can be constrained by factors like packet loss and frame size. Applications need to be efficient in their data usage to avoid bottlenecks.

Network Security

- Definition: The protection of a network and the data it carries against attacks and unauthorized access.

- Reality: Networks are vulnerable to various security threats, including attacks and unauthorized access. Security must be built into the system from the beginning, with multi-layered defenses and continuous monitoring.

Network Topology

- Definition: The arrangement of different elements (links, nodes, etc.) in a computer network.

- Reality: Network topology is dynamic, with changes in server locations, client connections, and routing paths. Applications should be designed to handle these changes gracefully.

Administrator

- Definition: The person responsible for managing and maintaining a network.

- Reality: Large networks often have multiple administrators with different responsibilities. Systems need to provide tools for diagnosing and resolving issues across different administrative domains.

Transport Cost

- Definition: The resources and financial expenses associated with data transport.

- Reality: Transporting data incurs costs, including computational resources for data serialization and financial expenses for network infrastructure. Efficient data handling and cost-effective solutions are necessary.

Network Homogeneity

- Definition: The degree to which a network consists of uniform devices and protocols.

- Reality: Networks are heterogeneous, comprising various devices, operating systems, and protocols. Designing for interoperability using standard technologies is essential to manage this diversity.

Redundancy

- Definition: The inclusion of extra components that are not strictly necessary to functioning, in case of failure in other components.

- Reality: Redundancy is crucial for ensuring network reliability and availability, especially in mission-critical applications.

Error-Handling Mechanisms

- Definition: Techniques used to manage and respond to errors in a system.

- Reality: Effective error-handling mechanisms are essential to maintain system stability and reliability in the face of network failures.

Interoperability

- Definition: The ability of different systems, devices, or applications to work together within a network.

- Reality: Interoperability is crucial in heterogeneous networks and can be achieved through the use of standard technologies like XML and Web Services.

Marshaling

- Definition: The process of gathering and arranging data into a format suitable for transmission over a network.

- Reality: Marshaling incurs computational costs and adds to latency, making efficient data serialization important.

Discovery Services

- Definition: Services that help locate network resources and endpoints.

- Reality: Discovery services are essential for handling dynamic network topologies and ensuring that applications can adapt to changes.

@article{rotem2006fallacies,
  title={Fallacies of distributed computing explained},
  author={Rotem-Gal-Oz, Arnon},
  journal={URL http://www.rgoarchitects.com/Files/fallacies.pdf},
  volume={20},
  year={2006}
}