The core intuition behind logical time in distributed systems is to provide a way to capture and reason about the causal relationships between events without relying on physical clocks, which can be unreliable or unsynchronized in a distributed environment.

Capturing Causality

Logical time aims to establish a consistent ordering of events based on their causal dependencies. This is crucial because, in distributed systems, events occur asynchronously, and physical clocks may not be perfectly synchronized across different processes.

Monotonicity Property

Logical clocks ensure that if an event (A) causally affects an event (B), then the logical timestamp of (A) will be less than the logical timestamp of (B). This monotonicity property helps maintain the causal order of events.

Types of Logical Clocks

- Scalar Time: Uses a single integer to represent time. Each process increments its clock with each event and updates it based on received messages. This method is simple but does not capture all causal relationships.

- Vector Time: Uses a vector of integers, where each element represents the logical time of a process. This method captures causal dependencies more accurately and provides strong consistency.

- Matrix Time: Uses an (n x n) matrix to represent the logical time, capturing both direct and indirect causal dependencies. This method provides a comprehensive view of the system's state but is more complex.

Practical Applications

Logical time is used in various distributed system applications, such as:

- Ensuring liveness and fairness in mutual exclusion algorithms.

- Maintaining consistency in replicated databases.

- Designing deadlock detection algorithms.

- Constructing consistent states for distributed debugging and failure recovery.

Efficiency and Optimization

To reduce the overhead associated with logical clocks, several optimization techniques have been proposed, such as differential techniques, direct-dependency techniques, and adaptive techniques. These methods help manage the complexity and communication costs while maintaining the ability to capture causal relationships.

Logical clocks provide a robust framework for capturing causality in distributed systems, enabling the design of reliable and efficient distributed algorithms. While scalar clocks offer simplicity, vector and matrix clocks provide detailed causal tracking at the cost of increased complexity. Efficient implementation techniques help mitigate these costs, making logical clocks a versatile tool in the realm of distributed computing.

In distributed systems, capturing the causality between events is crucial for designing and analyzing parallel and distributed computing systems.

Causality in Distributed Systems

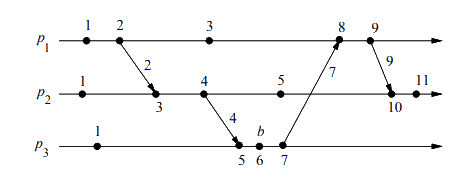

A distributed computation involves multiple processes that cooperate and compete to achieve a common goal. These processes communicate by passing messages over a network with finite but unpredictable delays. The actions of a process are modeled as internal events, message send events, and message receive events. The causal precedence relation among these events induces a partial order, which is fundamental for reasoning about the computation.

Logical Clocks

Logical clocks are mechanisms designed to capture the causality between events in a distributed system. They provide a way to order events based on their causal relationships rather than physical time, which is not always reliable in distributed settings. The article discusses three main types of logical clocks: scalar time, vector time, and matrix time.

Scalar Time

Scalar time, proposed by Lamport, uses non-negative integers to represent time. Each process maintains a local logical clock, which is incremented with each event. When a message is sent, the current clock value is piggybacked on the message. Upon receiving a message, a process updates its clock to the maximum of its current value and the received value, ensuring the consistency of event ordering.

Properties:

- Ensures a total order of events.

- Simple to implement but does not capture all causal relationships.

Vector Time

Vector time extends scalar time by using a vector of non-negative integers, where each element represents the logical time of a process. Each process maintains a vector clock, updating its own element with each event and incorporating the vector clocks of received messages.

Properties:

- Captures causal dependencies exactly.

- Provides strong consistency, allowing determination of causal relationships between events.

- More complex and requires more storage and communication overhead.

Matrix Time

Matrix time further extends vector time by using an (n x n) matrix, where each element represents the knowledge of one process about the logical time of another process. This method provides a comprehensive view of the system's state, capturing both direct and indirect causal dependencies.

Properties:

- Offers detailed tracking of causal relationships.

- Useful for applications requiring precise knowledge of event dependencies.

- High complexity and overhead, making it less practical for large systems.

Efficient Implementations

Given the overhead associated with vector and matrix clocks, several techniques have been proposed to optimize their implementation:

- Singhal-Kshemkalyani's Differential Technique: Reduces communication overhead by piggybacking only the changed entries of the vector clock since the last interaction.

- Fowler-Zwaenepoel's Direct-Dependency Technique: Maintains only direct dependencies, constructing vector timestamps offline through recursive searches.

- Jard-Jourdan's Adaptive Technique: Observes events adaptively, maintaining the ability to retrieve all causal dependencies while reducing the need to record every event.

Applications of Logical Clocks

Logical clocks are instrumental in various distributed system applications, including:

- Distributed Algorithms Design: Ensuring liveness and fairness in mutual exclusion algorithms, maintaining consistency in replicated databases, and designing correct deadlock detection algorithms.

- Tracking Dependent Events: Constructing consistent states for distributed debugging, failure recovery, and checkpointing in replicated databases.

- Concurrency Measurement: Measuring the amount of concurrency in a computation by analyzing causal dependencies.

Key Terms

Causality

The relationship between events where one event (the cause) leads to another event (the effect). In distributed systems, causality helps in understanding the sequence and dependencies of events.

Distributed System

A network of independent computers that work together to achieve a common goal. These systems do not share a global memory and communicate by passing messages.

Logical Clock

A mechanism to order events in a distributed system based on their causal relationships rather than physical time. Logical clocks help in capturing the causality between events.

Scalar Time

A type of logical clock proposed by Lamport, where time is represented by non-negative integers. Each process maintains a local clock that is incremented with each event.

Vector Time

An extension of scalar time, where time is represented by a vector of non-negative integers. Each element in the vector corresponds to the logical time of a process, capturing causal dependencies more accurately.

Matrix Time

A further extension of vector time, where time is represented by an (n x n) matrix. Each element in the matrix represents the knowledge of one process about the logical time of another process, providing a comprehensive view of the system's state.

Event

An action that occurs at a process in a distributed system. Events can be internal (affecting only the process), message send events, or message receive events.

Message Passing

The method by which processes in a distributed system communicate. Messages are sent and received over a network, and the delay is finite but unpredictable.

Causal Precedence

A partial order on the events of a distributed computation, indicating which events causally affect others. This relation is fundamental for reasoning about the computation.

Consistency

The property that ensures the logical clock values respect the causal relationships between events. For example, if event A causally affects event B, the logical clock value of A should be less than that of B.

Differential Technique

An optimization technique for vector clocks that reduces communication overhead by piggybacking only the changed entries of the vector clock since the last interaction.

Direct-Dependency Technique

A technique that maintains only direct dependencies between processes, constructing vector timestamps offline through recursive searches.

Adaptive Technique

A method that observes events adaptively, maintaining the ability to retrieve all causal dependencies while reducing the need to record every event.

Mutual Exclusion

A property of distributed systems ensuring that multiple processes do not enter their critical sections simultaneously, preventing conflicts and ensuring data consistency.

Deadlock Detection

The process of identifying a situation where a set of processes are blocked, each waiting for an event that only another process in the set can cause. Logical clocks help in designing algorithms to detect and resolve deadlocks.

Checkpointing

The process of saving the state of a process at certain points to facilitate recovery in case of failures. Logical clocks help in ensuring consistency of checkpoints across processes.

Concurrency

The property of a system where multiple events or processes can occur simultaneously without interfering with each other. Logical clocks help in measuring and managing concurrency in distributed systems.

@techreport{raynal1995march,

title={March. Logical time: A way to capture causality in distributed systems},

author={Raynal, M and Singhal, M},

year={1995},

institution={Technical Report RR-2472, INRIA-Rennes}

}