Datasets with version history allow for analysis of data at each stage. Therefore, the results of queries also change with respect to time. If such a version control capability were added, the best solution would have minimal impact to database performance.

1. What is the problem?

As Data Scientists frequently transform their data, very few systems are put in place to store each step reproducibly and query across versions. A solution is needed that allows for advanced query capability, as well as versioning, in a production database. Instead of creating a new solution and requiring users to migrate, existing databases should be made to support versioning for data analytics.

2. Why is it important?

The database needs to perform as if it is unaware of versioning except when computing aggregate statistics. Certain datasets are rapidly evolving, but analysis needs to be performed on a specific version before being modified and checked back in (or branched). These branched versions need to be explored, modified, and merged back in for global statics and queries. While linear versioning offers some ability to roll back, branching allows for permutations to be explored as the interactions of the data evolves.

3. Why is it hard?

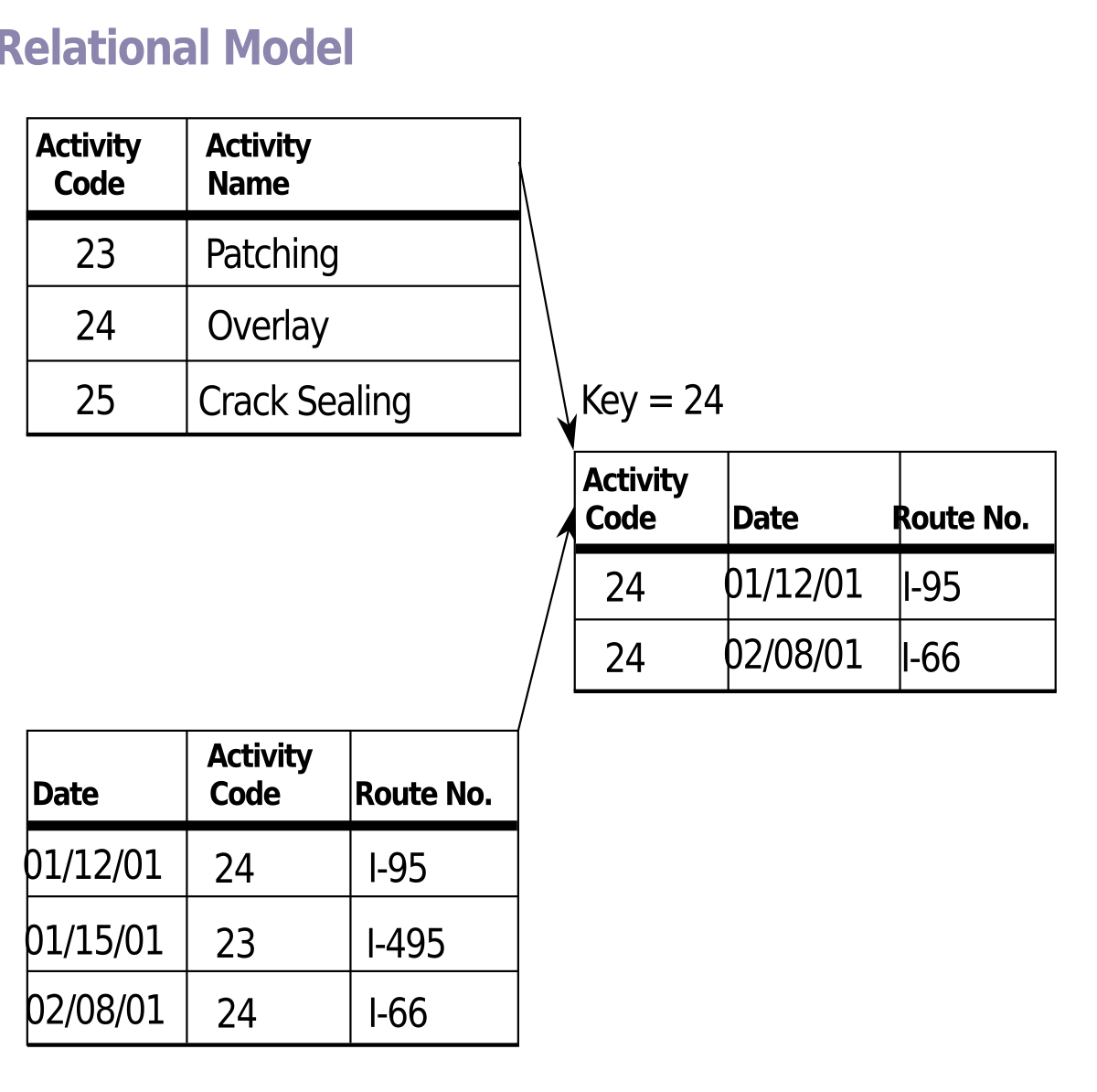

There are many considerations in the design of capturing versions including representation scheme, datamodel, and the balancing of storage costs and query costs. For example, adding a version number to an entry will get repeated with each version that it belongs to. If this number were stored in an array, it prevents the array from being repeated, but would then require each array to be modified with each version. Adding a time stamp is not sufficient either as it does not support branching within version control. An array of records, vs versions, will allow for easy insertion of new versions, but has the overhead of joining the version table with the data table.

4. Why existing solutions do not work?

Source code version control (like git or SVN) does not allow for dataset queries. Storing data on a network file system requires meaningful naming conventions or directory structure. To maintain query-ability, data can be stored in alternate tables within the database, but this leads to redundancy and manual moderation. If one resorts to storing the most recent version, there is no ability to trace back to the original datasets. Temporal databases do not support branching, merging, and analytics of such representations.

5. What is the core intuition for the solution?

In terms of storage and query latencies, join operations are the driver. To reduce joins, partitioning can be used to recreate versions, but this increases storage cost. The authors have developed an algorithm called LyreSplit that incrementally maintains partitions to balance the trade-offs of storage and retrieval time based on the complexity of the version graph. This is shown to minimize the time taken in a migration by 10x, and is 1000x faster than competing algorithms.

6. Does the paper prove its claims?

This paper adequately describes the architecture of the Orpheus system and the exploration of each design decision. They authors describe and implement multiple designs of a collaborative versioned dataset (CVD). The design space analyzed in these approaches reaches the asymptotes of storage and speed. Therefore, reaching a final design in the middle helps prove the claim that an optimal balance has been reached.

The authors first implement the version attribute approach using PostgreSQL. They demonstrate that adding versions can be expensive with large numbers of records.

In a second implementation, the authors separate the version information from the data information. This implementation has overhead caused by join operations when checking out the data.

The third implementation stores the records present in a particular version, with version id as the primary key. This eliminates expensive array operations, but needs to recreate versions when performing advanced queries.

The fourth implementation is a delta-based model in which each version records modifications in a separate table. A checkout traces the version lineage back to the root, however deltas with multiple parents become complicated.

The fifth and final implementation creates a table per version - which duplicates many records. This is impractical due to excessive storage - it was done just for comparison.

7. What is the setup of analysis/experiments? is it sufficient?

To test each analysis, the authors use record sets ranging from 1M to 8M entries. In each experiment, they check out the latest version and then commit back the materialized data as a new version. From this, storage size is measured (including indexes not returned by joins) as well as the transaction time (checkout and commit). 30% of the records were modified by the commit. Performing all these tests, they conclude that the third implementation was preferable as it most efficiently supports advanced queries. However, given the asymptotic read performance of the fifth approach, the authors propose a hybrid approach as a final solution.

LyreSplit was evaluated using the datasets from Maddox in which a branch is still a working copy of the dataset. The Science and Curation (SCI and CUR) workloads were selected for their real-world similarities. Tests were run on a Xeon E3-1240 CPU with 16 GB memory. Based on the size predictions, this is sufficient hardware for the database to run entirely in memory.

8. Are there any gaps in the logic/proof?

Approach 2, split by vlist, has a higher than expected Checkout time for the 5M recordset. This is not addressed. As a larger critique, the authors should have performed multiple runs for each configuration and provided a box plot in the report. This would help alleviate concerns with a result that does not trend with the rest of the results. Additionally, checkout time would be more meaningfully plotted on a log scale.

While this paper discusses the space between two asymptotic designs, it never discusses the asymtotes directly.

9. Describe at least one possible next step.

Git has the concept of commit and push. So far OrpheusDB only acts as a local Git node. A next step would be to push multiple commits to a remote master or branch head.

Another topic of exploration is the merge policies. How the database reconciles merge conflicts is a major consideration for a team going to production.

BibTex Citation

@article{huang2017rpheus,

title={Orpheus DB: bolt-on versioning for relational databases},

author={Huang, Silu and Xu, Liqi and Liu, Jialin and Elmore, Aaron J and Parameswaran, Aditya},

journal={Proceedings of the VLDB Endowment},

volume={10},

number={10},

pages={1130--1141},

year={2017},

publisher={VLDB Endowment}

}