Recently, a colleague and I were discussing how to get the customer on board with tracking code coverage as a performance metric with respect to unit testing.

While we both know that good code coverage usually means a better product, and that it often goes hand in hand with practices like Test Driven Development, how can you explain that effectively to a customer?

Note: Some of the names and specifics have been changed.

The following are the highlights of this conversation...

Hey,

I am writing something to the customer on code coverage and why we should use it.

I need better language than "it is the right thing to do and reduces bugs"

It is definitely a software engineering best practice.

maybe "code coverage performs analysis to direct quality testing"

what should I call code coverage?

Here are some common metrics:

- Function coverage is a measure of how many of the functions/methods (of those defined) have been called.

- Statement coverage is how many of the statements in the program have been executed.

- Decision coverage is how many of the true/false outcomes of each decision (such as the overall test of an if statement) have been exercised.

- Branch coverage is how many of the branches of the control structures (if statements, for instance) have been executed.

- Condition coverage is how many of the individual boolean sub-expressions (the parts of a compound logical expression) have each been evaluated as both true and false.

- Line coverage is how many of the lines of source code have been executed by tests.
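These metrics can disagree with each other. The Python sketch below instruments a small, hypothetical `apply_discount` function by hand to show how. The `CoverageLog` class and the statement, decision, and condition totals are illustrative assumptions. A real coverage tool gathers this data automatically. A single test reaches 100% statement (and line) coverage, yet it exercises only half of the decision outcomes and one of the four condition outcomes.

```python
from dataclasses import dataclass, field


@dataclass
class CoverageLog:
    """Hand-rolled bookkeeping that a real coverage tool would do for us."""
    statements: set = field(default_factory=set)  # labels of statements that ran
    decisions: set = field(default_factory=set)   # outcomes of the whole `if` test
    conditions: set = field(default_factory=set)  # (condition name, value) pairs seen

    def cond(self, name, value):
        """Record one individual condition as it is evaluated, then pass it through."""
        self.conditions.add((name, value))
        return value


# Totals for the original, uninstrumented function (assumed for this example).
TOTAL_STATEMENTS = 3   # the `if`, the discount assignment, and the `return`
TOTAL_DECISIONS = 2    # the `if` test can be True or False
TOTAL_CONDITIONS = 4   # two conditions, each True or False

log = CoverageLog()


def apply_discount(price, is_member, has_coupon):
    """Hypothetical function under test, instrumented by hand."""
    log.statements.add("if")
    # `or` short-circuits: has_coupon is only evaluated when is_member is False.
    decision = log.cond("is_member", is_member) or log.cond("has_coupon", has_coupon)
    log.decisions.add(decision)
    if decision:
        log.statements.add("discount")
        price = price * 0.9
    log.statements.add("return")
    return price


def report():
    """Percentage of each coverage target reached so far."""
    return {
        "statement": 100 * len(log.statements) / TOTAL_STATEMENTS,
        "decision": 100 * len(log.decisions) / TOTAL_DECISIONS,
        "condition": 100 * len(log.conditions) / TOTAL_CONDITIONS,
    }


# One test runs every statement but sees only one decision outcome.
apply_discount(100, True, False)
print(report())  # {'statement': 100.0, 'decision': 50.0, 'condition': 25.0}

# Two more tests cover the False outcome and the has_coupon condition.
apply_discount(100, False, False)
apply_discount(100, False, True)
print(report())  # {'statement': 100.0, 'decision': 100.0, 'condition': 100.0}
```

Because this function contains a single `if`, decision and branch coverage coincide here. The gap between them and statement coverage is why a high line-coverage number can still hide untested paths.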

I think we record "line coverage," if you are looking to be more specific.

A commonly cited industry target is around 80% line coverage.
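Line coverage is the easiest of these numbers to explain to a customer. Here is a minimal sketch of how it can be measured using only the Python standard library. It targets a small, hypothetical `shipping_cost` function. The `ast` module identifies which lines are executable, and `sys.settrace` records which of those lines the test inputs actually run. The 80% threshold mirrors the target discussed here; it is not a value any tool enforces by default. In practice, a tool such as coverage.py does this bookkeeping for you.

```python
import ast
import sys

# Hypothetical code under test, kept in a string so its line numbers are known.
SOURCE = """
def shipping_cost(weight_kg, express):
    cost = 5.0
    if weight_kg > 10:
        cost += 2.5
    if express:
        cost *= 2
    return cost
"""

TARGET_PERCENT = 80.0  # the commonly cited goal, not a universal standard


def executable_lines(source):
    """Line numbers of every statement inside the function body."""
    lines = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.stmt) and not isinstance(node, ast.FunctionDef):
            lines.add(node.lineno)
    return lines


def executed_lines(func, test_inputs):
    """Run func on each input tuple and record which of its lines execute."""
    hit = set()
    target = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not target:
            return None  # do not trace any other function
        if event == "line":
            hit.add(frame.f_lineno)
        return tracer  # keep tracing lines inside the target function

    sys.settrace(tracer)
    try:
        for args in test_inputs:
            func(*args)
    finally:
        sys.settrace(None)  # always switch tracing off again
    return hit


def line_coverage(test_inputs):
    """Return (percent of executable lines run, sorted list of missed lines)."""
    namespace = {}
    exec(compile(SOURCE, "<shipping_cost>", "exec"), namespace)
    possible = executable_lines(SOURCE)
    covered = executed_lines(namespace["shipping_cost"], test_inputs) & possible
    return 100 * len(covered) / len(possible), sorted(possible - covered)


for tests in ([(3, False)], [(3, False), (12, True)]):
    percent, missed = line_coverage(tests)
    verdict = "meets" if percent >= TARGET_PERCENT else "misses"
    print(f"{percent:.1f}% line coverage ({verdict} target), missed lines: {missed}")
```

With only the first input, coverage is 66.7%, with lines 5 and 7 never run. Adding the second input raises it to 100%.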

what does it buy us in the client's eyes?

reduces risk and increases ROI (helps keep the backlog focused on new features rather than bug fixes)

Code coverage provides assurance that the covered portion of the software has been exercised by tests, and behaves as those tests expect, before a live user performs acceptance testing.

why do we care if our scan says we have 0% code coverage or 100%?

If it says 0%, our tests give us no evidence that the software functions as intended once it leaves the development team.

Is there something like that which applies to code coverage?

Code coverage is a metric for your testing maturity/rigor

is there a fancy word, though?

I am looking for a term like DevOps

Test Driven Development

Requirements Traceability

Another one might be "Acceptance Criteria," which leads into user-based testing.

For example, if I said "test this," wouldn't you ask, "Chris, did you even try it?"

In summary,

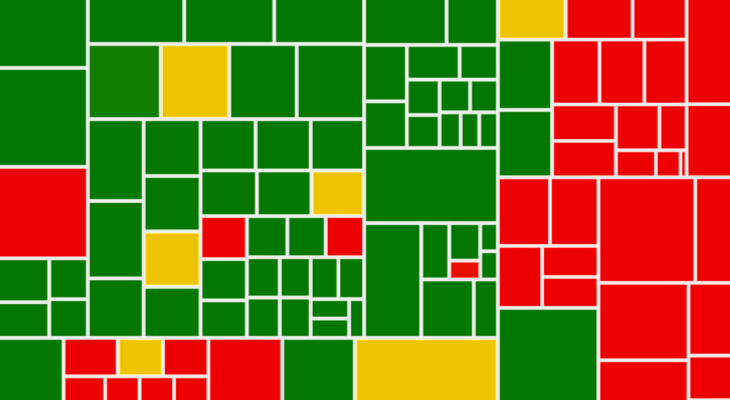

Code coverage is the percentage of our application's code that is executed when the automated tests run.
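As a formula, using line coverage as the example metric:

$$
\text{line coverage} = \frac{\text{executable lines run by the automated tests}}{\text{total executable lines}} \times 100\%
$$

Take a hypothetical application with 2000 executable lines, of which the tests run 1700. That gives $\frac{1700}{2000} \times 100\% = 85\%$ line coverage.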

It should tie back to requirements, but it doesn't have to. How closely it does depends on factors such as how detailed the requirements are.

I will say "Increased code coverage metrics ensure target code quality."

While code coverage is a great way to ensure your product works as intended, it does not guarantee that it meets the customer's functional requirements.

Functional testing is typically less automated, but it is more often used as acceptance testing for the customer.

| | Code Coverage | Functional Coverage |
|---|---|---|
| Definition | Code coverage tells you how much of the source code has been executed by automated testing. | Functional coverage measures how well the functionality of the design has been covered during testing. |
| Relationship to design document | Sometimes references the design specification | Directly correlated with the design specification |
| Who performs it | Done by developers | Done by testers |
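Functional coverage can be tracked in a similarly simple way if each test is tagged with the requirement it verifies. That same tagging is also the basis of requirements traceability. Here is a minimal sketch under two assumptions: hypothetical requirement IDs (`REQ-1` to `REQ-4`) from a design specification, and a hand-maintained mapping from test names to those IDs. In practice that mapping usually lives in a test management tool. The sketch reports which requirements have no passing test, however many lines of code the tests happen to execute.

```python
# Hypothetical requirements taken from a design specification.
REQUIREMENTS = {
    "REQ-1": "User can log in",
    "REQ-2": "User can reset a password",
    "REQ-3": "Admin can export a report",
    "REQ-4": "Session expires after inactivity",
}

# Hypothetical test results: test name -> (requirement IDs it verifies, passed?)
TEST_RESULTS = {
    "test_login_success": ({"REQ-1"}, True),
    "test_login_bad_password": ({"REQ-1"}, True),
    "test_password_reset_email": ({"REQ-2"}, False),
    "test_export_csv": ({"REQ-3"}, True),
}


def functional_coverage(requirements, results):
    """Return (percent of requirements verified by a passing test, unverified IDs)."""
    known = set(requirements)
    verified = set()
    for req_ids, passed in results.values():
        if passed:
            verified |= req_ids & known  # ignore tags for unknown requirements
    return 100 * len(verified) / len(known), sorted(known - verified)


percent, gaps = functional_coverage(REQUIREMENTS, TEST_RESULTS)
print(f"Functional coverage: {percent:.0f}%")
for req_id in gaps:
    print(f"  not verified: {req_id} ({REQUIREMENTS[req_id]})")
```

With this sample data, functional coverage is 50%. REQ-2's only test fails, and REQ-4 has no test at all. Code coverage alone would not reveal either gap.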

For a developer, code coverage helps to determine what additional tests should be written.

It also highlights areas of the program that are less thoroughly tested, and therefore less mature, than the rest.

Above all, when all unit tests pass and code coverage is high, the developer feels confident that the product is ready to move forward in the software pipeline.
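Both developer uses can be sketched together. The sketch takes per-module line data of the kind a coverage tool exports and does three things. It lists the uncovered lines as candidates for new tests, and it flags modules that lag behind the target. It also lets the build move forward only when every test passes and overall coverage meets the target. The module names, line numbers, and the 80% threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModuleCoverage:
    name: str
    executable: set  # lines that could run
    executed: set    # lines the unit tests actually ran

    @property
    def percent(self):
        return 100 * len(self.executed & self.executable) / len(self.executable)

    @property
    def missed(self):
        return sorted(self.executable - self.executed)


TARGET_PERCENT = 80.0  # assumed team target

# Hypothetical per-module data, as a coverage tool might report it.
modules = [
    ModuleCoverage("billing", set(range(1, 11)), set(range(1, 10))),
    ModuleCoverage("reports", set(range(1, 11)), {1, 2, 3, 4, 5}),
]


def ready_to_promote(modules, tests_passed, target=TARGET_PERCENT):
    """Gate: return (all tests pass and overall line coverage >= target, overall %)."""
    total = sum(len(m.executable) for m in modules)
    hit = sum(len(m.executed & m.executable) for m in modules)
    overall = 100 * hit / total
    return tests_passed and overall >= target, overall


# Point developers at the modules, and the exact lines, that need more tests.
for m in modules:
    if m.percent < TARGET_PERCENT:
        print(f"{m.name}: {m.percent:.0f}% covered, write tests for lines {m.missed}")

ok, overall = ready_to_promote(modules, tests_passed=True)
print(f"overall {overall:.0f}% -> {'promote' if ok else 'hold'}")
```

With this sample data, `reports` is flagged at 50%. The gate holds the build because overall coverage is only 70%, even though every test passed.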