Consensus algorithms are crucial for building reliable distributed systems. While Paxos has been the go-to solution for many years, Raft has gained popularity due to its simpler design and easier implementation. Understanding these algorithms is essential for anyone working with distributed systems or building fault-tolerant applications.

The Need for Consensus

Consensus algorithms allow a group of processes in a distributed system to agree on a shared state. This is crucial for maintaining consistency across the system, especially in the face of failures or network partitions.

Paxos: The Classic Consensus Algorithm

Paxos, developed by Leslie Lamport in 1989, is one of the most well-known consensus algorithms. Despite its proven effectiveness, Paxos has a reputation for being difficult to understand and implement.

Key Components of Paxos:

- Proposers: Nodes that propose values

- Acceptors: Nodes that evaluate and decide on proposals

- Learners: Nodes that need to learn the agreed-upon value

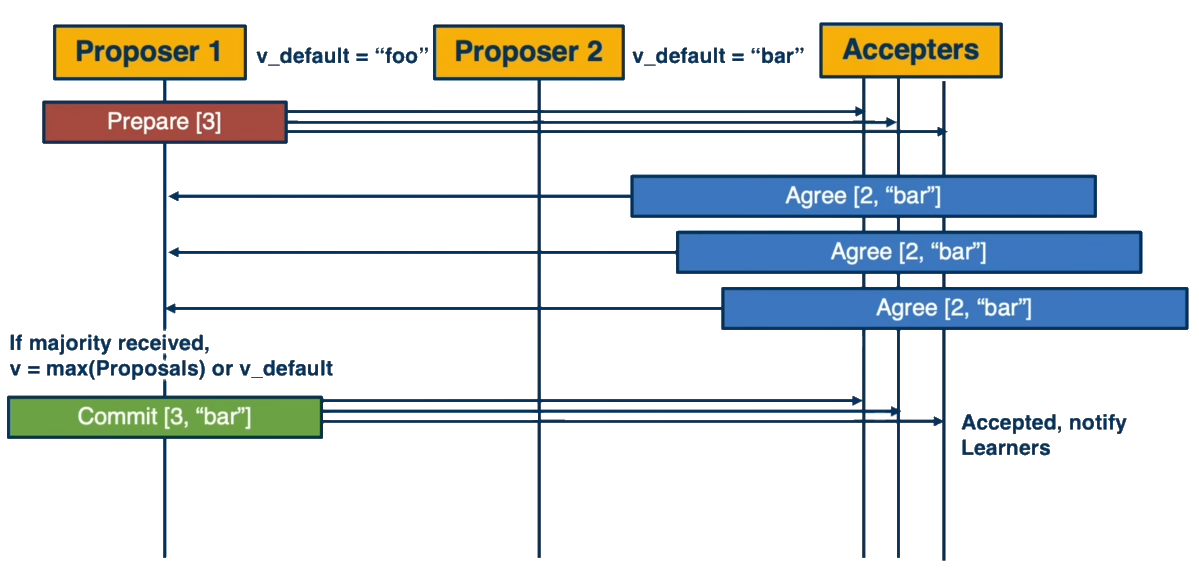

Paxos Protocol Phases:

- Prepare Phase: A proposer sends a prepare request with a proposal number.

- Accept Phase: If a majority of acceptors respond positively, the proposer sends an accept request.

- Learn Phase: Acceptors inform learners of the accepted value.

Paxos guarantees safety (only proposed values are chosen, and only a single value is agreed upon) but does not guarantee liveness (progress is not always guaranteed).

Raft: A More Understandable Consensus Algorithm

Raft was developed in 2014 by Diego Ongaro and John Ousterhout as a more understandable alternative to Paxos.

Key Features of Raft:

- Leader Election: A distinct phase for electing a leader

- Log Replication: The leader manages log replication among followers

- Safety: Guarantees log consistency across nodes

Raft Protocol Phases:

- Leader Election: Nodes vote to elect a leader

- Normal Operation (Log Replication): The leader accepts client requests and replicates log entries

Raft simplifies the consensus process by clearly separating concerns and introducing the concept of terms (similar to views in other protocols).

Comparing Paxos and Raft

While both algorithms solve the consensus problem, they differ in their approach:

- Complexity: Raft is designed to be more understandable and easier to implement

- Leadership: Raft has a strong leader model, while Paxos allows for multiple proposers

- Understandability: Studies have shown that students tend to understand Raft more easily than Paxos

Real-world Applications

Both Paxos and Raft have been implemented in various production systems:

- Paxos: Google's Chubby lock service, Apache ZooKeeper

- Raft: etcd, Consul, TiKV

Key Concepts

Consensus: The process by which a group of nodes in a distributed system agree on a single value or state.

Distributed System: A network of independent computers that appear to users as a single coherent system.

Safety: The property that ensures nothing bad ever happens in the system (e.g., two nodes never disagree on a chosen value).

Liveness: The property that ensures something good eventually happens (e.g., the system eventually reaches a decision).

Quorum: The minimum number of nodes that must participate in a vote for the vote to be considered valid, typically a majority.

State Machine Replication: A technique where each node in a distributed system is modeled as a state machine, and all nodes process the same sequence of commands to maintain consistency.

View: In viewstamped replication, a configuration of the system with an assigned leader.

Snapshot: A point-in-time copy of the system's state, used to optimize log management and node recovery.

Log Compaction: The process of reducing the size of the replicated log by discarding old entries that are no longer needed.

Byzantine Fault Tolerance: The ability of a system to function correctly even when some nodes fail in arbitrary or malicious ways (not addressed by Paxos or Raft).

Paxos-specific Concepts

Proposer: A node in Paxos that proposes values to be agreed upon.

Acceptor: A node in Paxos that decides whether to accept proposed values.

Learner: A node in Paxos that learns and stores the agreed-upon values.

Proposal Number: A unique identifier for each proposal in Paxos, used to order proposals.

Prepare Phase: The first phase of Paxos where a proposer asks acceptors to promise not to accept lower-numbered proposals.

Accept Phase: The second phase of Paxos where a proposer asks acceptors to accept a specific value.

Multi-Paxos: An optimization of Paxos for agreeing on a sequence of values, often using a stable leader.

Raft-specific Concepts

Leader: The node responsible for handling all client requests and managing replication in Raft.

Follower: A passive node in Raft that responds to requests from leaders and candidates.

Candidate: A node in Raft that is competing to become a leader during an election.

Term: A logical clock in Raft that represents a period with a single leader.

Log Entry: A record in Raft containing a client command and the term when the entry was received by the leader.

Log Replication: The process in Raft where the leader replicates its log entries to followers.

Leader Election: The process in Raft where nodes vote to select a new leader.

Heartbeat: A periodic message sent by the Raft leader to maintain its authority and prevent new elections.

Review Questions

What are the main ideas for 2PC and 3PC?

2PC (Two-Phase Commit):

- Uses a coordinator

- Assumes coordinator won't fail

- Coordinator proposes value, participants vote, coordinator tallies and communicates decision

- Simple but blocks if failures occur, does not guarantee liveness

3PC (Three-Phase Commit):

- Addresses blocking problem of 2PC

- Has pre-prepare, prepare, and decision phases

- Guarantees liveness but only works with fail-stop mode

- Issues with safety if nodes restart or delayed messages are delivered later

Provide some history behind PAXOS

- Originally written by Leslie Lamport in 1990

- Not published until 1998 due to reviewers not appreciating the humorous description

- Original paper described the algorithm using fictional Greek parliamentarians on Paxos island

- In 2001, Lamport wrote "Paxos Made Simple" to make the algorithm more approachable

Description of PAXOS? What's expected from the system (the system model) for the protocol to hold?

- Designed for systems with asynchronous communication and non-Byzantine process failures

- Agents operate at arbitrary speed, may fail by stopping and restart

- Participants have persistent memory to remember information after restarting

- Messages can take arbitrarily long to be delivered, can be duplicated, lost, or reordered, but cannot be corrupted

What is hard about determining whom to follow?

Determining leadership in distributed systems is challenging due to asynchronous communication, potential failures, and the need for consensus among participants.

Main ideas that PAXOS assumes: state-machine replication, majority quorum, …

- State machine replication: Each node is a replica of a state machine following the same rules for state updates

- Majority quorum: Decisions based on majority agreement, ensuring two quorums always intersect

- Heavy reliance on time steps (e.g., Lamport logical clocks) to order events and tolerate arbitrary message delays

Goal and description of the phases in Paxos

Reach consensus on a single value among distributed nodes.

Phases:

- Prepare phase: Proposer sends prepare request with proposal number to acceptors

- Accept phase: If proposer receives majority agreement, it sends accept request to acceptors

- Learn phase: Acceptors communicate decided value to learners

What is the functionality Paxos provides? Why we need Multi-Paxos?

Paxos provides consensus on a single value

Multi-Paxos is needed for agreeing on sequences of values, which is more practical for real-world applications (e.g., database updates, application state changes)

Motivation for Raft, key idea and differences than Paxos

Motivation: Improve understandability of consensus algorithms

Key idea: Separate leader election from log replication phases

Differences from Paxos:

- Distinct leader election phase

- More straightforward log comparison and management

- Designed for better understandability and easier implementation

Log management in Raft - how is info used to update, discard entries, trim/garbage collection?

Logs are append-only

- Log matching property: If two entries in different logs share the same index and term, all previous entries are the same

- New leaders force other servers to use their log

- Uncommitted entries from previous terms may be discarded

- Periodic log truncation using snapshots for efficiency

- Leaders can send snapshots to help lagging nodes catch up quickly