Distributed transactions and systems like Google's Spanner represent the cutting edge of data management technology. They enable companies to operate at a truly global scale while maintaining data consistency and reliability.

In today's interconnected world, companies like Google and Facebook serve billions of users across the globe. To manage such massive amounts of data efficiently, they rely on sophisticated distributed data management systems. One such system, developed by Google, is called Spanner.

Understanding Distributed Transactions

A distributed transaction is like a regular transaction, but it is executed across multiple nodes in a network. The challenge lies in ensuring that the ACID properties (Atomicity, Consistency, Isolation, Durability) are maintained across these distributed nodes.

Google's Spanner

Spanner, introduced by Google in 2012, is a global-scale distributed database management system. It is not just a research project; it is used in production at Google for critical services like Google Ads and Google Play. Spanner allows applications to use familiar SQL queries while scaling data management to a global level.

Key Features of Spanner:

- Global Distribution: Data is spread across multiple data centers worldwide.

- Sharding: Within each location, data is partitioned (or "sharded") across thousands of servers.

- Replication: Data is replicated across multiple sites for fault tolerance and availability.

TrueTime

One of Spanner's most innovative features is its use of TrueTime. Traditional distributed systems struggle with clock synchronization, which can lead to inconsistencies. TrueTime is Google's solution to this problem.

TrueTime does not give an exact time; instead, it provides an uncertainty interval around the real time. This interval tells us the earliest and latest possible real times at any given moment. By using TrueTime, Spanner can reason about the uncertainty in clock readings across the system.

How Spanner Handles Transactions

Spanner uses a combination of techniques to manage distributed transactions:

- Pessimistic Locking: Spanner acquires all necessary locks upfront, anticipating potential conflicts.

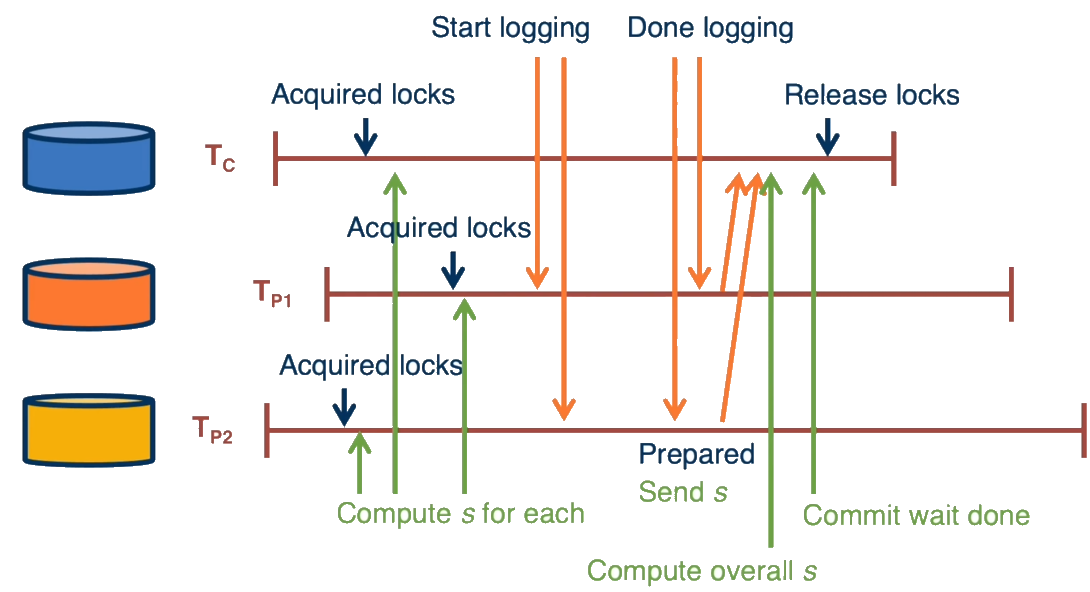

- Two-Phase Commit: For transactions spanning multiple replica sets, Spanner uses a two-phase commit protocol.

- Paxos Consensus: Within each replica set, Paxos is used to maintain consistency.

The use of TrueTime allows Spanner to assign globally meaningful timestamps to transactions, ensuring that the order of transactions in the system matches their real-world order.

Read Transactions in Spanner

Spanner distinguishes between two types of read transactions:

- Read-now Transactions: These return the current value in the system but may be delayed to ensure consistency.

- Read-at-timestamp Transactions: These read values at a specific timestamp in the past, which can be achieved more efficiently.

Alternatives to Spanner

While Spanner is a powerful system, it relies on specialized hardware (GPS and atomic clocks) for precise timekeeping. Other systems have emerged to provide similar functionality without these requirements:

- CockroachDB: Built by ex-Google engineers, it aims to provide Spanner-like benefits without the need for specialized hardware.

- Amazon Aurora: Uses a primary-replica architecture and focuses on availability even under large-scale failures.

Key Concepts

Distributed Transaction: A transaction that is executed across multiple nodes in a network, while still maintaining ACID properties.

ACID Properties:

- Atomicity: All operations in a transaction succeed, or none of them do.

- Consistency: The transaction brings the database from one valid state to another.

- Isolation: Concurrent transactions do not interfere with each other.

- Durability: Once a transaction is committed, it remains so.

Spanner: Google's globally distributed database management system that provides scalable, multi-version, globally distributed, and synchronously replicated database.

Sharding: The practice of partitioning data across multiple servers to improve scalability and performance.

TrueTime: Google's time API that provides an uncertainty interval around the real time, allowing for reasoning about clock uncertainty across the system.

Pessimistic Locking: A concurrency control method where locks are acquired on data before performing any operations.

Two-Phase Commit (2PC): A distributed algorithm for coordinating all the processes that participate in a distributed atomic transaction.

Paxos Consensus: An algorithm for reaching consensus in a network of unreliable processors.

Read-now Transactions: In Spanner, these are read operations that return the current value in the system but may be delayed to ensure consistency.

Read-at-timestamp Transactions: In Spanner, these are read operations that retrieve data at a specific timestamp in the past.

Primary-Replica Architecture: A database architecture where one node (the primary) handles both read and write operations, while other nodes (replicas) only serve read traffic.

Quorum: The minimum number of votes that a distributed transaction has to obtain in order to be allowed to perform an operation in a distributed system.

IO Amplification: The phenomenon where a single I/O operation at the application level results in multiple I/O operations at the storage level.

External Consistency: A property ensuring that the order of transactions in the system matches their real-world order.

Global-scale Data Management: The practice of managing data across multiple geographically distributed data centers.

Review Questions

What is the concept of TrueTime? How is that related to the Logical Clocks discussed earlier in the semester? How can it be implemented?

TrueTime is a concept introduced by Google's Spanner system that provides a way to reason about uncertainty in time measurements across a distributed system. Unlike logical clocks, which only provide relative ordering of events, TrueTime attempts to tie events to real, physical time, but with an acknowledged uncertainty interval.

Key points about TrueTime:

- It returns an interval [earliest, latest] instead of a single timestamp.

- It allows reasoning about whether a given time has definitely passed or not yet arrived.

- It is implemented using a combination of GPS and atomic clocks in data centers.

- It involves periodic probing of master clock servers to compute an epsilon value capturing clock drift and uncertainty.

Relation to Logical Clocks: While logical clocks focus on establishing a partial ordering of events without reference to physical time, TrueTime aims to provide a global ordering that closely approximates real time, but with bounded uncertainty.

Implementation:

- Deploys GPS and atomic clocks in data centers.

- Uses fast networking between nodes.

- Involves periodic probing of master clock servers.

- Computes an epsilon value to represent the uncertainty interval.

- Achieves uncertainty intervals on the order of a few milliseconds.

How does the use of TrueTime change what is required from the system (Spanner) in order to establish ordering among transactions?

- Allowing the system to assign timestamps to transactions that approximate their real-time order, but with bounded uncertainty.

- Requiring transactions to wait out a "commit window" to ensure that their assigned timestamp is globally valid.

- Enabling the system to reason about external consistency (strict serializability) by tying transaction timestamps to an approximation of real time.

- Eliminating the need for a single global clock while still providing strong consistency guarantees.

Using TrueTime how do you establish ordering of write operations? How do you implement a read operation? What about reading a snapshot "in the past"?

Ordering write operations:

- Acquire all necessary locks upfront (pessimistic locking).

- Choose a TrueTime timestamp S that is guaranteed to be greater than any previously committed transaction.

- Execute the transaction and achieve consensus among replicas.

- Wait out the commit window to ensure S is less than any future transaction's timestamp.

- Release locks and notify participants of the chosen timestamp.

Implementing read operations:

-

For "read now" transactions:

- The leader determines a "safe" timestamp.

- May be delayed if there are prepared but not yet committed transactions.

-

For reading at a specific past timestamp:

- Leverage the timestamped nature of transactions.

- Return a consistent snapshot of the system at the specified timestamp without running a distributed cut algorithm.

Describe at a high-level Spanner, how does it organize and replicate data, who is involved in serving read or write operations...

Data organization and replication:

- Globally distributed across multiple geographic locations.

- Data partitioned (sharded) across thousands of servers at each location.

- Replicated across multiple sites for fault tolerance and availability.

Serving read/write operations:

- Uses a coordinator or leader to initiate transactions.

- Employs protocols like two-phase commit or Paxos for consensus.

- Utilizes TrueTime for timestamp assignment and ordering.

- Implements both local and distributed transactions.

-

Supports SQL queries for familiar application interaction.

Describe at a high-level Aurora, how does it organize and replicate data, who is involved in serving read or write operations...

Data organization and replication:

- Follows a primary-replica architecture.

- Replicates data to multiple zones, with each zone having two replicas.

- Uses a shared distributed storage layer across replicas.

Serving read/write operations:

- Single primary node handles both read and write operations.

- Other nodes serve only read traffic.

- Uses log replication instead of full data replication.

- Relies on quorum voting among replicas for correctness.

Can you explain/justify the differences in the two systems. You can consider a number of possible dimensions such as different design goals, underlying assumptions, performance, ...

Differences between Spanner and Aurora:

Design goals:

- Spanner: Global scale, strong consistency, support for distributed transactions.

- Aurora: High availability, fault tolerance, optimized for read-heavy workloads.

Underlying assumptions:

- Spanner: Assumes ability to implement TrueTime (GPS and atomic clocks).

- Aurora: Assumes shared distributed storage layer.

Performance considerations:

- Spanner: May introduce latency due to commit wait times.

- Aurora: Optimizes for reduced I/O amplification.

Consistency model:

- Spanner: Provides external consistency (strict serializability).

- Aurora: Offers eventual consistency with options for stronger guarantees.

Scalability:

- Spanner: Designed for global scale-out.

- Aurora: Focuses on scale-up within regions/zones.

What are some other design points that can be considered in systems which do not have TT?

- Use of logical clocks or hybrid logical clocks for event ordering.

- Implementing vector clocks for capturing causality.

- Employing optimistic concurrency control mechanisms.

- Using snapshot isolation or multi-version concurrency control (MVCC).

- Implementing distributed consensus algorithms like Paxos or Raft.

- Providing configurable consistency levels (e.g., CockroachDB's linearizability flag).

- Using primary-replica architectures to localize write operations.

- Implementing timestamp ordering protocols without relying on synchronized physical clocks.

These alternative approaches allow systems to provide various levels of consistency and performance trade-offs without the need for the specialized hardware and precise time synchronization required by TrueTime.